bloom

ML art installation based on latent space exploration, in collaboration with a plant

| Technology | Python, Machine Learning, TouchDesigner, Spout/UDP |
| Collaborators | Yvonne Fang, Eesha Shetty, Lia Coleman, Michelle Zhang |

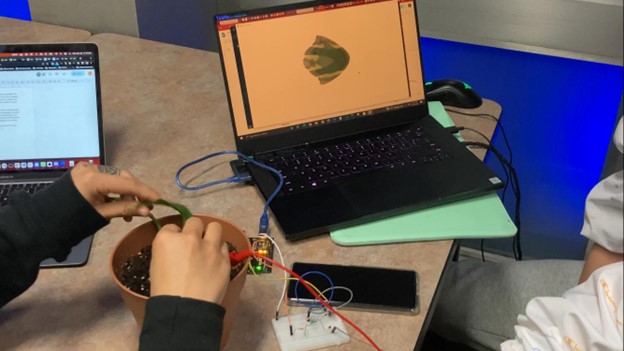

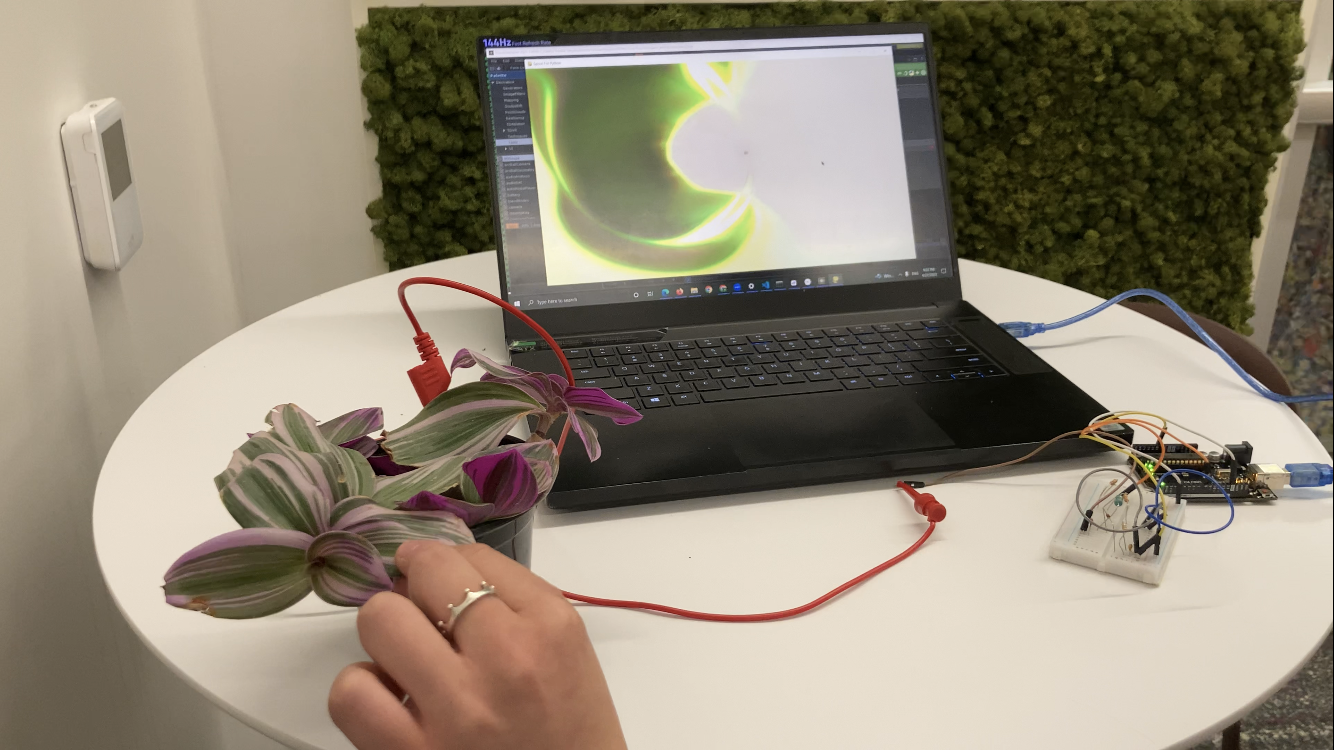

This interactive art installation explores co-creation between humans, plants, and AI by combining sensory elements with image generation using StyleGAN3. Sensors placed on a plant's leaves and stems let participants collaborate with a living organism: the installation renders StyleGAN-generated visuals of plant leaves in real time, influenced by both the plant's biological signals and the participant's touch. By giving the plant agency to navigate the latent space of our fine-tuned StyleGAN, the project blurs the boundaries between human, plant, and machine to create a unique interactive experience.
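To make the idea of a plant "navigating" latent space more concrete, here is a minimal sketch of one way sensor readings could steer a position between two latent vectors. It is not the installation's actual code: the `LatentNavigator` class, the equal plant/touch weighting, the smoothing factor, and the raw sensor range are all hypothetical, and the 512-dimensional latent is simply StyleGAN3's default, assumed here to carry over to the fine-tuned model.

```python
import numpy as np

Z_DIM = 512  # StyleGAN3's default latent size; assumed for the fine-tuned model


def normalize_reading(raw, lo, hi):
    """Map a raw sensor reading onto [0, 1], clamping out-of-range values.

    The range (lo, hi) is a hypothetical calibration, not a measured one.
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return float(np.clip((raw - lo) / (hi - lo), 0.0, 1.0))


def slerp(a, b, t):
    """Spherically interpolate between latent vectors a and b at t in [0, 1]."""
    a_unit = a / np.linalg.norm(a)
    b_unit = b / np.linalg.norm(b)
    omega = np.arccos(np.clip(np.dot(a_unit, b_unit), -1.0, 1.0))
    if omega < 1e-6:
        # Nearly parallel vectors: fall back to linear interpolation.
        return (1.0 - t) * a + t * b
    sin_omega = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / sin_omega) * a + (np.sin(t * omega) / sin_omega) * b


class LatentNavigator:
    """Hypothetical helper: turns two normalized signals into a latent vector."""

    def __init__(self, seed=0, smoothing=0.9):
        rng = np.random.default_rng(seed)
        # Two random anchor points in latent space to travel between.
        self.anchor_a = rng.standard_normal(Z_DIM)
        self.anchor_b = rng.standard_normal(Z_DIM)
        self.smoothing = smoothing
        self.position = 0.0  # current place on the path from anchor_a to anchor_b

    def step(self, plant_signal, touch_signal):
        """Advance one frame; both signals are expected in [0, 1]."""
        # Assumed mixing rule: plant and participant contribute equally.
        target = 0.5 * plant_signal + 0.5 * touch_signal
        # Exponential smoothing keeps the visuals from jumping between frames.
        self.position = self.smoothing * self.position + (1.0 - self.smoothing) * target
        return slerp(self.anchor_a, self.anchor_b, self.position)


if __name__ == "__main__":
    nav = LatentNavigator(seed=42)
    plant = normalize_reading(612, lo=0, hi=1023)  # made-up 10-bit sensor value
    z = nav.step(plant_signal=plant, touch_signal=1.0)
    print(z.shape)
```

Each returned vector would then be passed to the generator to produce a frame. Spherical rather than linear interpolation is a common choice for Gaussian latents because it keeps intermediate vectors at a norm similar to the endpoints.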

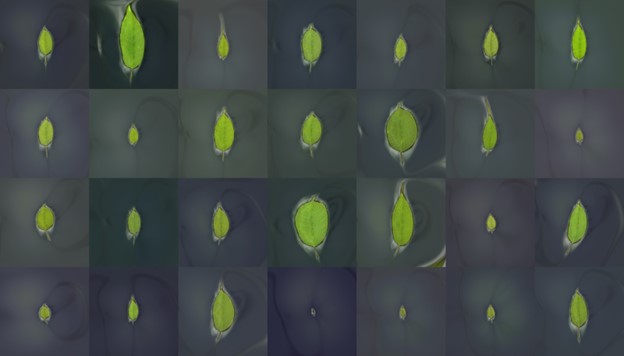

Dataset

StyleGAN3 was fine-tuned on a plant leaf dataset from Instagram (514 photos of leaves taken from the same fallen tree, @alongletter). The resulting checkpoints produce intriguing artifacts, such as smoky and neon appearances in the generated images. The checkpoints are imperfect, possibly because of data augmentation or mode collapse, but their outputs are beautiful and unexpected.