Dream Field 2.0 (WIP)

Unreal Engine, LLM multi-agent simulation, real-time mesh and texture generation

Dream Field 2.0 is a work in progress that continues my exploration of AI as a malleable creative material: one that breaks down the semantic divide between humans and nonhumans, and between different species and lifeforms, and imagines new ways for humans to relate to nature that are less anthropocentric and more deeply intertwined with the ecosystem we are part of. I also continue to explore the potential of AI, and LLMs in particular, to align or fuse with nonhuman forms of intelligence in nature, serving not as an assistant that furthers human interests alone but as a bridge between humans and the natural world. These topics matter to me in an age when the way we live is so detached and distanced from nature.

![]()

My medium and technical pipeline are closely tied to my concept: a morphing, ever-evolving virtual world that is unbounded by static 3D assets and textures and goes beyond the traditional game engine. Having mainly worked with real-time image generation models such as StreamDiffusion in the past, I went a step further and experimented with real-time 3D mesh generation. Below is my experiment fitting an icosphere mesh to a dolphin mesh with PyTorch3D, a Python library for 3D deep learning, and streaming the morphing mesh into Unreal Engine as a procedural mesh.

![]()
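The post doesn't include code for the mesh-fitting step. PyTorch3D ships the pieces for it (for example `ico_sphere` in `pytorch3d.utils` and `chamfer_distance` in `pytorch3d.loss`), and its mesh-deformation tutorial adds smoothness regularizers on top. As a dependency-free illustration of the core idea only, the sketch below runs plain gradient descent on the Chamfer distance between icosahedron vertices (a level-0 icosphere) and a target point cloud. Assumptions: the elongated ellipsoid stands in for the dolphin, no regularizers are used, and `pack_vertices` is a hypothetical wire format for streaming each frame to the engine, not the format used in this project.

```python
import math
import struct

def icosahedron():
    """Unit-length vertices of an icosahedron, i.e. an icosphere at subdivision level 0."""
    t = (1.0 + math.sqrt(5.0)) / 2.0
    raw = [(-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
           (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
           (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)]
    norm = math.sqrt(1.0 + t * t)  # every raw vertex has this same length
    return [[c / norm for c in v] for v in raw]

def fibonacci_sphere(n):
    """n roughly evenly spaced points on the unit sphere (deterministic)."""
    golden = math.pi * (3.0 - math.sqrt(5.0))
    pts = []
    for i in range(n):
        y = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - y * y)
        pts.append([r * math.cos(golden * i), y, r * math.sin(golden * i)])
    return pts

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(p, pts):
    """Index of the point in pts closest to p."""
    return min(range(len(pts)), key=lambda j: sq_dist(p, pts[j]))

def chamfer(src, tgt):
    """Symmetric Chamfer distance: mean squared nearest-neighbour distance in both directions."""
    s2t = sum(sq_dist(p, tgt[nearest(p, tgt)]) for p in src) / len(src)
    t2s = sum(sq_dist(q, src[nearest(q, src)]) for q in tgt) / len(tgt)
    return s2t + t2s

def fit_step(src, tgt, lr=0.1):
    """One gradient-descent step on the Chamfer distance with respect to the source vertices."""
    n, m = len(src), len(tgt)
    grad = [[0.0, 0.0, 0.0] for _ in src]
    for i, p in enumerate(src):  # source -> target term
        q = tgt[nearest(p, tgt)]
        for k in range(3):
            grad[i][k] += 2.0 * (p[k] - q[k]) / n
    for q in tgt:  # target -> source term pulls the closest source vertex toward q
        i = nearest(q, src)
        for k in range(3):
            grad[i][k] += 2.0 * (src[i][k] - q[k]) / m
    return [[p[k] - lr * grad[i][k] for k in range(3)] for i, p in enumerate(src)]

def pack_vertices(verts):
    """Hypothetical wire format: uint32 vertex count, then little-endian float32 xyz triples."""
    flat = [c for v in verts for c in v]
    return struct.pack(f"<I{len(flat)}f", len(verts), *flat)

# Stand-in target: an elongated ellipsoid point cloud instead of a real dolphin mesh.
target = [[2.0 * x, 0.6 * y, 0.6 * z] for x, y, z in fibonacci_sphere(200)]
source = icosahedron()
start_loss = chamfer(source, target)
for _ in range(100):
    source = fit_step(source, target)
    payload = pack_vertices(source)  # one frame of the morph, ready to send to the engine
end_loss = chamfer(source, target)
print(f"chamfer: {start_loss:.3f} -> {end_loss:.3f}, {len(payload)} bytes per frame")
```

Packing every intermediate step, rather than only the converged result, is what turns the optimization into an animation. On the Unreal side, a receiver could write each frame into a `UProceduralMeshComponent` section (for instance via `UpdateMeshSection`), keeping the triangle indices fixed since only vertex positions change.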

To visualize and build out the world these morphing creatures will live in, I created an open world in Unreal Engine, largely based on the first iteration of this installation.

![]()

Next, I added real-time AI texturing to the creatures. This workflow runs StreamDiffusion (a very fast real-time AI image generation model that can generate up to 30 frames per second) together with ControlNet inside TouchDesigner, then sends the morphing texture back into Unreal Engine as a video stream to be applied to the meshes. In the GIF below, you can see the texture morph from a monarch butterfly to a blue jay.

![]()
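The post doesn't say how the butterfly-to-blue-jay transition is driven. One simple approach, assumed here purely for illustration, is to cross-fade the conditioning between two prompts over a fixed number of frames. The sketch below computes an eased blend schedule at the quoted 30 frames per second and linearly interpolates two embedding vectors. The three-element embeddings are toy stand-ins for real text-encoder output, and `blend_weights` and `lerp` are hypothetical helpers, not StreamDiffusion or TouchDesigner APIs.

```python
FPS = 30  # upper bound quoted above; the real rate depends on hardware and settings

def smoothstep(x):
    """Ease-in/ease-out curve on [0, 1]; inputs outside the range are clamped."""
    x = max(0.0, min(1.0, x))
    return x * x * (3.0 - 2.0 * x)

def blend_weights(frame, start_frame, duration_s, fps=FPS):
    """Weights (w_a, w_b) for prompts A and B at a given frame; they always sum to 1."""
    progress = (frame - start_frame) / (duration_s * fps)
    w_b = smoothstep(progress)
    return 1.0 - w_b, w_b

def lerp(a, b, t):
    """Linear interpolation between two equal-length embedding vectors."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]

prompt_a, prompt_b = "monarch butterfly", "blue jay"
emb_a = [1.0, 0.0, 0.0]  # toy stand-in for the embedding of prompt_a
emb_b = [0.0, 1.0, 0.0]  # toy stand-in for the embedding of prompt_b

frame_budget_ms = 1000.0 / FPS  # time available to generate each frame
schedule = [blend_weights(f, 0, duration_s=3.0) for f in range(91)]  # a 3-second morph
conditioning = [lerp(emb_a, emb_b, w_b) for _, w_b in schedule]
```

At 30 frames per second each frame has roughly 33 ms to be generated and streamed, so the schedule is computed up front and the per-frame work is just a lookup and an interpolation.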

The next step is to have the creatures go about their lives and even interact with each other. As a start, I added some AI-driven movement and animation.

![]()

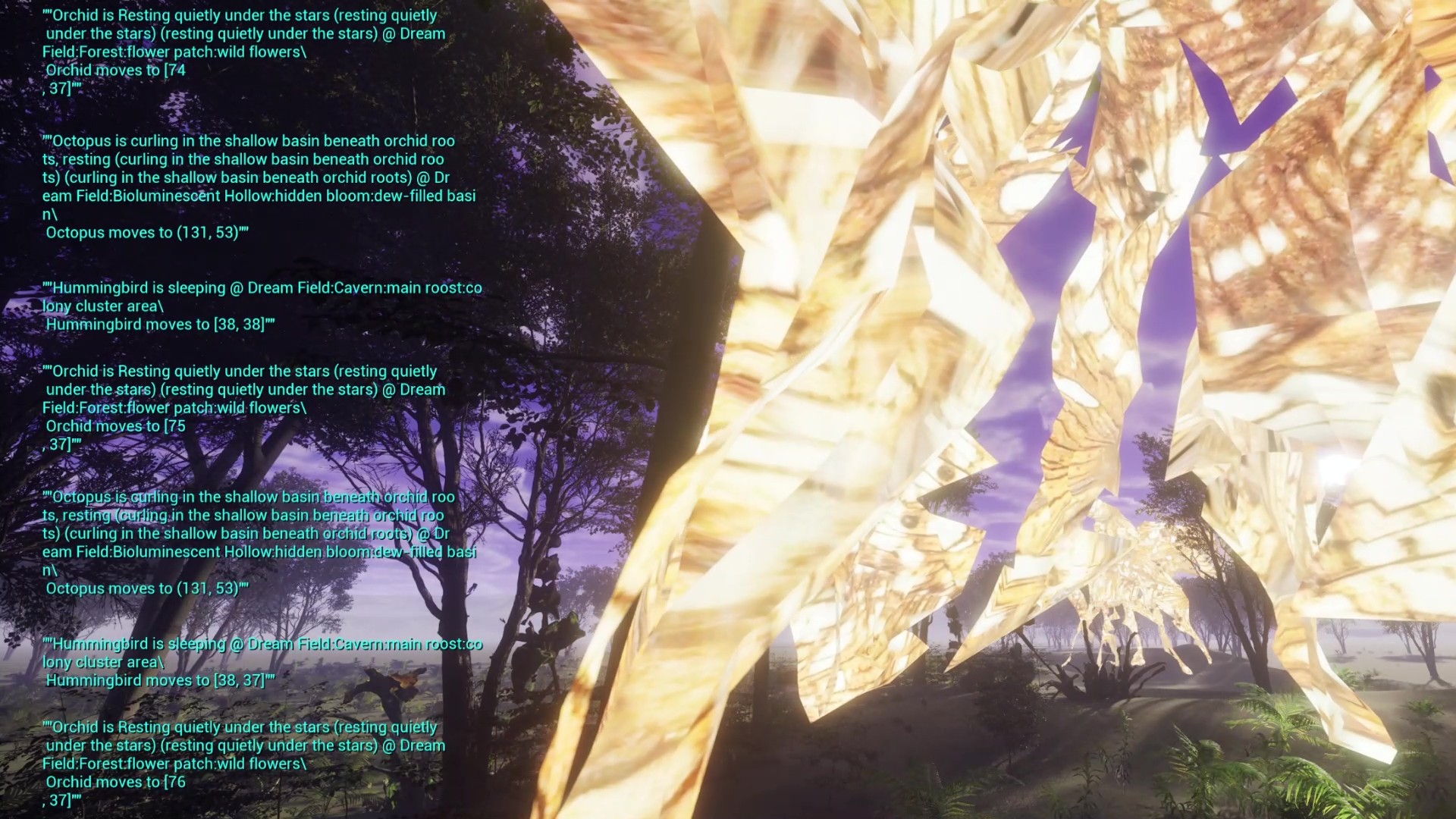
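The post doesn't specify which Unreal systems drive this movement; Unreal has its own behavior trees and navigation tools for that. As an engine-agnostic illustration only, here is a minimal "wander" steering sketch on a 2D ground plane: each tick the creature turns by a small random amount, moves forward at constant speed, and turns back toward home if it strays beyond a radius. The function name and all parameters (`speed`, `jitter`, `bounds`, a 30 Hz tick) are hypothetical.

```python
import math
import random

def wander_step(pos, heading, rng, speed=1.0, jitter=0.3, dt=1 / 30, bounds=50.0):
    """Advance one creature by one tick of a simple wander behavior."""
    heading += rng.uniform(-jitter, jitter)  # small random turn each tick
    x = pos[0] + math.cos(heading) * speed * dt
    y = pos[1] + math.sin(heading) * speed * dt
    if math.hypot(x, y) > bounds:  # strayed too far: face back toward the origin
        heading = math.atan2(-y, -x)
    return (x, y), heading

rng = random.Random(7)  # seeded so the walk is reproducible
creatures = [((0.0, 0.0), rng.uniform(0.0, 2.0 * math.pi)) for _ in range(5)]
for _ in range(300):  # ten seconds at 30 ticks per second
    creatures = [wander_step(p, h, rng) for p, h in creatures]
```

Because the turn is a bounded jitter on the previous heading rather than a fresh random direction, the paths stay smooth, which tends to read as more animal-like than pure random walking.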

I am also working on “modding” the generative agents village simulation from a Stanford research paper to simulate a nonhuman ecosystem, and using it to drive the behavior of the creatures in my virtual world. The process has been thought-provoking. To what extent can LLM-powered systems that were prompted to simulate human perception, thought, and behavior be repurposed to simulate the perception and behavior of nonhuman creatures? Since LLMs don’t think and plan exactly as humans do, might they even be better at simulating nonhuman agents? Could that be one of the ways AI serves as a bridge, bringing humans closer to the nonhuman world?

![]()

![]()
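The post doesn't show the modded code. For context, here is a sketch of the memory-retrieval step at the heart of the generative agents architecture (Park et al., 2023), as I understand the paper's description: each memory is scored by recency (exponential decay, factor 0.995 per game hour since last access), importance (1 to 10, rated by an LLM), and relevance (cosine similarity to the current query), each min-max normalized and summed with equal weights. Swapping a villager's memories for a creature's is one plausible place where such a mod would start. The blue jay memories, embeddings, and scores below are hypothetical toy data, not output from the project.

```python
import math
from dataclasses import dataclass

@dataclass
class Memory:
    text: str
    embedding: list       # toy vector; the paper uses a language-model embedding
    importance: float     # 1 to 10; in the paper an LLM assigns this score
    last_access_h: float  # game time (hours) when the memory was last retrieved

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def min_max(values):
    """Rescale to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]

def retrieve(memories, query_emb, now_h, k=2, decay=0.995, weights=(1.0, 1.0, 1.0)):
    """Return the k memories with the highest recency + importance + relevance score."""
    recency = min_max([decay ** (now_h - m.last_access_h) for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(query_emb, m.embedding) for m in memories])
    wr, wi, wv = weights
    scored = [(wr * r + wi * i + wv * v, m)
              for r, i, v, m in zip(recency, importance, relevance, memories)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored[:k]]

# Hypothetical memories of a blue jay; toy embedding axes are [food, danger, shelter].
memories = [
    Memory("acorns cached under the oak", [1.0, 0.0, 0.2], importance=6, last_access_h=20.0),
    Memory("a hawk circled the meadow", [0.0, 1.0, 0.0], importance=9, last_access_h=2.0),
    Memory("rain pooled in the hollow log", [0.1, 0.0, 1.0], importance=3, last_access_h=23.0),
]
query = [0.9, 0.1, 0.0]  # toy embedding of a perception such as "I am hungry"
top = retrieve(memories, query, now_h=24.0)
```

The retrieved memories would then be placed into the agent's prompt; for a nonhuman agent, that prompt (and what counts as "important") is where species-specific perception would have to be expressed.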