Dream Field

2024, Interactive projection, real-time AI image generation, Unreal Engine

Dream Field is an extended reality experience exploring new possibilities for world-building afforded by emerging image generation technologies. It investigates the aesthetic qualities of diffusion models, which operate in dreamlike possibility spaces known as latent spaces, to provoke more equal, caring relationships between humans and nature. The piece imagines a speculative AI system trained on nonhuman data and intentions, as opposed to human-centered interests. This AI system tirelessly maintains a virtual ecosystem, finely controlling its ecological balance. By stepping in front of this AI system’s lens (a webcam), the human audience witnesses their face gradually morph into a nonhuman creature that benefits the ecosystem, in a subtly uncanny real-time video stream. The morph is generated by blending prompt embeddings (text encoded as numbers) in an image-to-image diffusion pipeline, blurring the semantic divide between species. This new creature is then ported to the ethereal, shifting landscape, carrying the story forward.
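As a rough illustration of what blending prompt embeddings can mean, here is a minimal sketch that linearly interpolates between two embedding vectors over a sequence of frames. The statement does not say which interpolation is used, so linear blending is an assumption, and the function names (`lerp_embeddings`, `morph_schedule`) and the toy 4-dimensional vectors are hypothetical. In a real pipeline the embeddings would come from the diffusion model's text encoder and each blended result would condition one image-to-image step.

```python
from typing import Iterator, List, Sequence


def lerp_embeddings(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Linearly interpolate between two equal-length embedding vectors.

    t = 0 returns a copy of `a`, t = 1 returns a copy of `b`.
    """
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def morph_schedule(
    a: Sequence[float], b: Sequence[float], steps: int
) -> Iterator[List[float]]:
    """Yield one blended embedding per frame, moving from `a` to `b`."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    for i in range(steps):
        yield lerp_embeddings(a, b, i / (steps - 1))


# Toy stand-ins for text-encoder outputs (assumption: real embeddings
# are much larger tensors produced by the model's text encoder).
human = [1.0, 0.0, 0.5, 0.0]      # e.g. embedding of a "human face" prompt
creature = [0.0, 1.0, 0.5, 2.0]   # e.g. embedding of a "nonhuman creature" prompt

frames = list(morph_schedule(human, creature, steps=5))
for index, embedding in enumerate(frames):
    print(index, embedding)
```

Each yielded vector would be passed as the text conditioning for one generated frame, so the output drifts gradually from the first prompt's meaning toward the second rather than switching abruptly.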

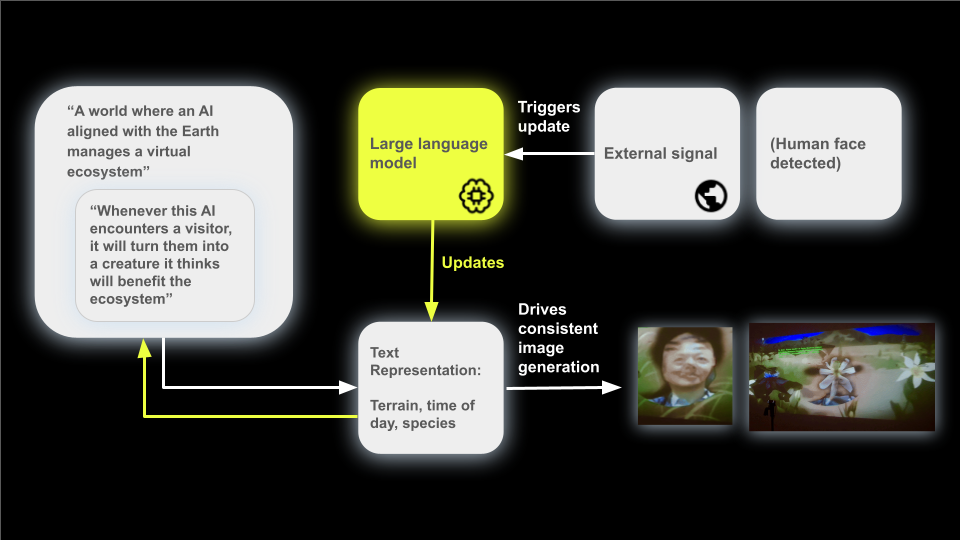

I developed a custom pipeline that uses real-time AI image generation (StreamDiffusion) to continuously update the scene, which is built on top of an open world created in Unreal Engine 5, alongside a narrative generation system powered by a large language model (LLM). This pipeline renders an open-ended world that responds to real-world data and changes endlessly throughout its lifetime, just like a living ecosystem. It pushes beyond the limits of traditional virtual worlds, which are constrained by static 3D assets. By blending 2D and 3D modalities, I create a dreamlike, disorienting visual effect that evokes the hallucination phenomenon inherent in generative AI systems. The resulting world represents an idealized version of reality, or a dream of it, echoing the emotional experience of ecological grief.


A detailed description of the technical pipeline is shown below: